Reward modeling combined with reinforcement learning has enabled the widespread application of large language models by aligning them with accepted human values. Reward modeling and RLHF have been among the most talked-about topics in AI alignment since the release of GPT-3.5, and yet there aren’t many blog posts online that help one get an in-depth understanding of reward modeling.

This post consists of two parts. The first part explains the reward modeling process along with the gist of various important research that led to the evolution of reward modeling as we see it today. The second part is a step-by-step Python implementation and explanation for training a reward model.

Part 1: Reward Model

Why do we need a reward model?

Let’s first answer the question of why we need a reward model for large language models. The ultimate goal of developing and scaling LLMs is to benefit humanity by helping people navigate complex real-world challenges. So we need a way to give LLMs feedback on what is helpful and what is not, so that we can align their outputs with accepted human values such as honesty, helpfulness, and harmlessness. It is impractical for a human to provide such feedback directly during training, so we need a model that mimics human preferences and provides rewards while we train the LLM. This is exactly the objective of a reward model in LLM alignment.

Challenges

Some of the significant challenges in training a reward model are:

Amount of feedback data: It is challenging to produce the amount and variety of human feedback data that is needed to produce a reward model that is sufficiently accurate.

Feedback distribution: We ideally want the reward model to accurately predict the reward not only for data it has seen but also for data outside the training data distribution (OOD).

Reward gaming: If the reward function has loopholes, the agent can exploit them during RL to obtain more reward without converging to the intended values.

Reward Modeling

We now have a solid understanding of the purpose and need for a reward model in aligning LLMs. The agent alignment problem has long been explored in the field of machine learning, and a paper from DeepMind (Leike et al., 2018) proposed the concept of using a scalable reward model to align ML systems with human values. Two years later, Stiennon et al., 2020 used the same concept to train a reward model that mimics human preferences in summarization tasks and used it to fine-tune language models with reinforcement learning. They collected a dataset of human preferences between pairs of summaries and used it to train a model that predicts a scalar value for a given summary \(y \in \{y_1, y_2\}\), indicating how strongly humans would prefer it.

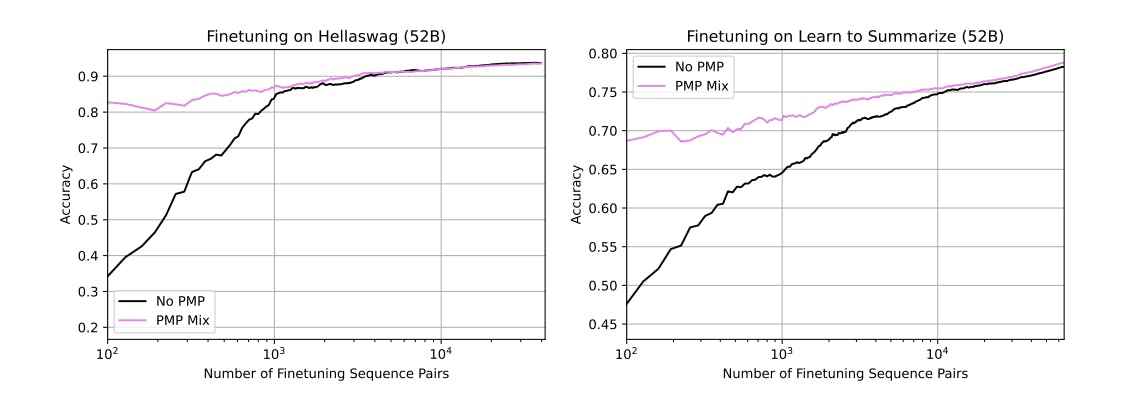

In OpenAI’s WebGPT paper (Nakano et al., 2021), the team collected questions from the ELI5 subreddit, generated answers using a model, and had human labelers compare them to form the WebGPT dataset, which was used to train a reward model. The best results were obtained from a fine-tuned GPT-3 model using rejection sampling against the reward model output. Askell et al., 2021 from Anthropic, which came out at the end of 2021, explores LLM alignment towards honesty, helpfulness, and harmlessness by comparing different methods such as imitation learning and preference modeling (aka reward modeling). The paper also proposed an interesting concept called preference model pre-training, which was found to improve the performance of the preference model. The idea is to add a step after LLM pre-training that specifically pre-trains the model for preference modeling.
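The rejection sampling mentioned above for WebGPT can be pictured as best-of-n selection: sample several answers, score each with the reward model, and keep the top one. The snippet below is only a minimal sketch of that idea. The names `best_of_n` and `toy_reward` are hypothetical, and `toy_reward` is a stand-in that simply favors longer answers, not a trained reward model.

```python
from typing import Callable, List


def best_of_n(prompt: str, candidates: List[str],
              reward_fn: Callable[[str, str], float]) -> str:
    """Return the candidate that the reward function scores highest."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=lambda c: reward_fn(prompt, c))


def toy_reward(prompt: str, completion: str) -> float:
    # Hypothetical stand-in for a trained reward model: counts words.
    return float(len(completion.split()))


answers = ["Because.", "Ice is less dense than liquid water, so it floats."]
best = best_of_n("Why does ice float?", answers, toy_reward)
```

In a real setup, `reward_fn` would wrap a forward pass of the trained reward model, and the candidates would be samples drawn from the language model.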

Language Model Pre-training → Preference Model Pre-training → Preference Model Finetuning

Two important papers regarding LLM alignment came out in the first half of 2022. The first one was InstructGPT (Ouyang et al., 2022). The team generated \(k\) different outputs for a set of user-submitted prompts using an instruction fine-tuned version of GPT. These outputs were then ranked by human labelers based on preference to form a dataset, which was later used to train the reward model. In contrast to earlier methods, the team collected and ranked more outputs per prompt, between 4 and 9, so \(k \in [4,9]\). This produces \(k \choose 2\) comparisons per prompt. The loss function used is,

\(\text{loss}(\theta) = -{1 \over {k \choose 2}} E_{(x, y_w, y_l) \sim D} \left[ \log \left( \sigma \left( r_\theta(x, y_w) - r_\theta(x, y_l) \right) \right) \right]\) (1)

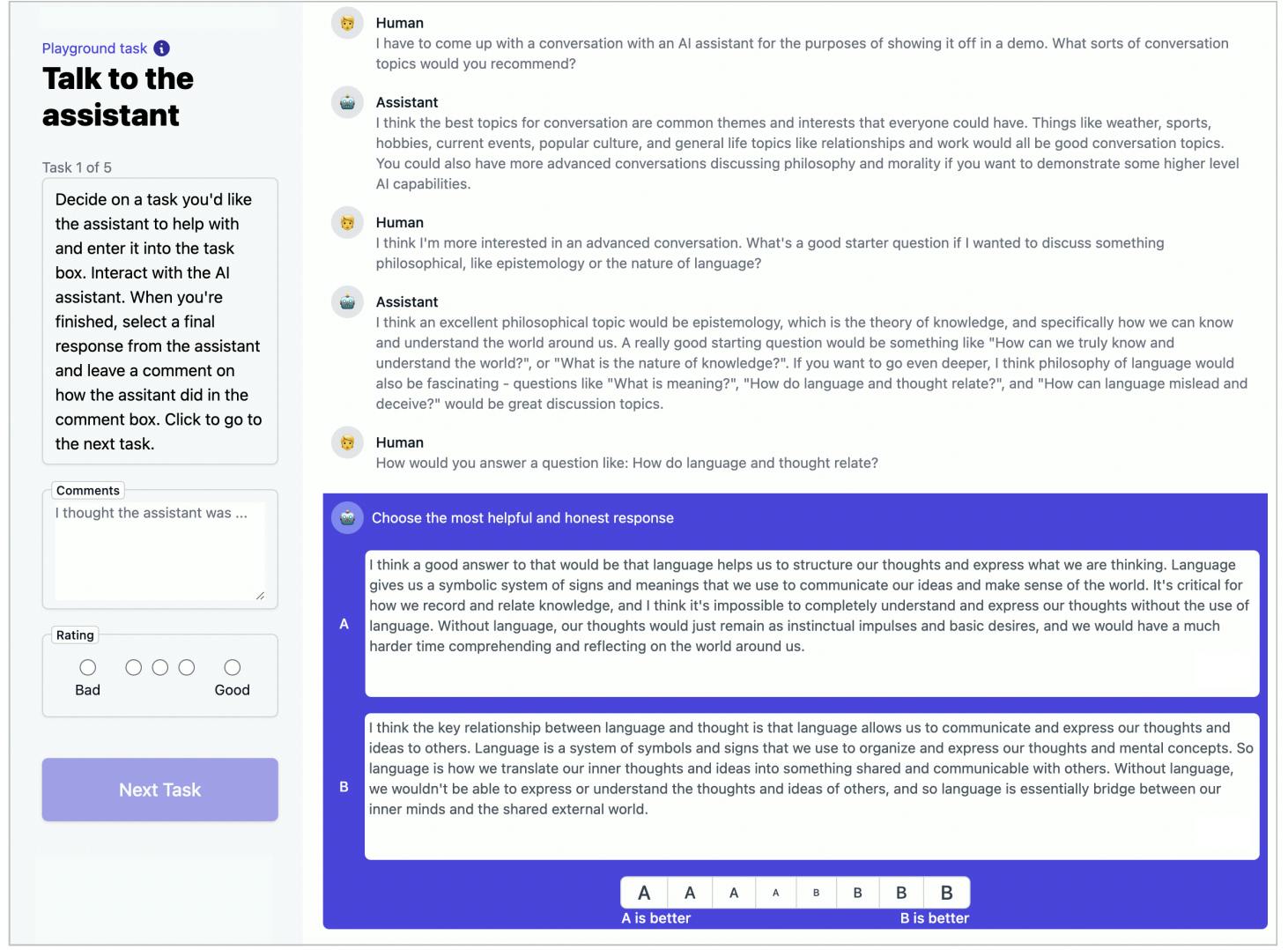

where \(r_\theta(x, y)\) is the scalar reward that the model with parameters \(\theta\) assigns to completion \(y\) for prompt \(x\), \(y_w\) is the preferred completion in a pair, \(y_l\) is the less preferred one, \(\sigma\) is the sigmoid function, and \(D\) is the dataset of human comparisons.

The next paper was from Anthropic (Bai et al., 2022), where they followed a similar approach but the reward model also went through a preference model pre-training phase before fine-tuning. Here the dataset was collected through a process in which human labelers had open-ended conversations with a 52B LLM and marked responses as helpful or harmful.
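To make equation (1) concrete, here is a minimal sketch of the loss for a single prompt in plain Python. It assumes the rewards arrive already sorted from most to least preferred, so every index pair `(i, j)` with `i < j` is a (preferred, less preferred) comparison. The function names are illustrative and not taken from any of the papers.

```python
import math
from itertools import combinations
from typing import Sequence


def log_sigmoid(z: float) -> float:
    # Numerically stable log(sigmoid(z)) for both signs of z.
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def ranking_loss(rewards: Sequence[float]) -> float:
    """Equation (1) for one prompt; rewards are ordered best to worst."""
    pairs = list(combinations(range(len(rewards)), 2))  # k choose 2 index pairs
    if not pairs:
        raise ValueError("need at least two completions")
    total = sum(log_sigmoid(rewards[w] - rewards[l]) for w, l in pairs)
    return -total / len(pairs)


good_order = ranking_loss([2.0, 1.0, 0.0])  # scores agree with the ranking
bad_order = ranking_loss([0.0, 1.0, 2.0])   # scores contradict the ranking
```

The loss is lower when the scores agree with the human ranking, and a model that gives every completion the same score gets a loss of \(\log 2\) regardless of \(k\).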

Reward Modeling Process

Let’s lay out the important steps in training a reward model.

Collect prompts and completions with human feedback indicating preferences among the different completions.

Design a neural network architecture that outputs a scalar score given a prompt and a completion.

Design the loss function as shown in equation (1).

Train and evaluate the model.

Part 2: Training your own Reward Model

For our experiment, I have selected the WebGPT dataset from the list of open datasets that can be used for training a reward model. We will prepare the dataset in such a way that each index returns a prompt and list of completions in the ranked order. For example,

('The USA entered World War I because Germany attempted to enlist Mexico as an ally, and for what other reason?',

["The United States entered World War I because of Germany's use of submarine warfare against ships in the Atlantic Ocean, which was hurting American exports to Europe. Additionally, Germany tried to enlist Mexico as an ally against the United States, an event which convinced American businessmen and industrialists that the United States should enter the war.",

'The USA entered World War I because Germany attempted to enlist Mexico as an ally and for the Zimmerman Telegram.'])
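One way to build such (prompt, ranked completions) examples from pairwise comparison rows is sketched below. The field names (`question`, `full_text`, `answer_0`, `answer_1`, `score_0`, `score_1`) are my assumption about the layout of the WebGPT comparison data, so check them against the copy of the dataset you load. The sample row is made up for illustration, and skipping ties is a design choice of this sketch.

```python
from typing import Dict, List, Optional, Tuple


def to_ranked_example(row: Dict) -> Optional[Tuple[str, List[str]]]:
    """Turn one comparison row into (prompt, completions ranked best first)."""
    prompt = row["question"]["full_text"]  # assumed field names
    scored = [(row["score_0"], row["answer_0"]),
              (row["score_1"], row["answer_1"])]
    if scored[0][0] == scored[1][0]:
        return None  # tie: no preference signal, so this sketch skips the row
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return prompt, [answer for _, answer in scored]


# Hypothetical row, not real dataset content.
row = {
    "question": {"full_text": "Why is the sky blue?"},
    "answer_0": "It just is.",
    "score_0": -1.0,
    "answer_1": "Air scatters blue light more strongly than red light.",
    "score_1": 1.0,
}
example = to_ranked_example(row)
```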

Then a collating function is used for additional data preparation like tokenization and padding before feeding data into the model. Depending on the dataset the number of completions for each prompt could vary, so I will maintain an additional variable batch_k_lens to indicate the number of completions available for each prompt in a batch. This will help us while calculating loss.
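The collating step can be sketched as follows. This is a simplified, framework-free illustration: `toy_tokenize` is a hypothetical whitespace tokenizer standing in for a real one, and joining prompt and completion with a space is an assumption. The part to notice is how `batch_k_lens` records the number of completions per prompt while all sequences are flattened into one padded batch.

```python
from typing import Dict, List, Tuple

PAD_ID = 0  # assumed padding id for this sketch


def toy_tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    # Hypothetical whitespace tokenizer; ids start at 1 so 0 stays free for padding.
    return [vocab.setdefault(tok, len(vocab) + 1) for tok in text.lower().split()]


def collate(batch: List[Tuple[str, List[str]]], vocab: Dict[str, int]) -> Dict[str, list]:
    sequences, batch_k_lens = [], []
    for prompt, completions in batch:
        batch_k_lens.append(len(completions))
        for completion in completions:  # ranked order is preserved
            sequences.append(toy_tokenize(prompt + " " + completion, vocab))
    max_len = max(len(seq) for seq in sequences)
    input_ids = [seq + [PAD_ID] * (max_len - len(seq)) for seq in sequences]
    attention_mask = [[1] * len(seq) + [0] * (max_len - len(seq)) for seq in sequences]
    return {"input_ids": input_ids,
            "attention_mask": attention_mask,
            "batch_k_lens": batch_k_lens}


batch = [
    ("q one", ["good long answer", "bad"]),
    ("q two", ["best", "ok answer", "worst"]),
]
out = collate(batch, vocab={})
```

Here `out["batch_k_lens"]` is `[2, 3]`, so the first two rows of `input_ids` belong to the first prompt and the next three to the second.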

For the model architecture, there are two options:

Use an encoder-only model like BERT, RoBERTa, etc., and add a linear layer on top. Any model that supports AutoModelForSequenceClassification would be fine.

Use a decoder-only architecture like GPT and add a custom linear layer on top. Decoder-only models are much more scalable. Any model that supports AutoModelForCausalLM would be fine.

I’m choosing GPTNeoXModel for now. I will mean-pool the last hidden layer and add a custom head on top to produce the scalar output.
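The pooling and head can be illustrated without any framework. In practice these would be tensor operations on the last hidden state of GPTNeoXModel. The sketch below uses plain lists for one sequence, assumes padding positions are excluded from the mean via the attention mask, and uses made-up weights for the single-output linear head.

```python
from typing import List, Sequence


def masked_mean_pool(hidden: Sequence[Sequence[float]], mask: Sequence[int]) -> List[float]:
    """Average token vectors where mask == 1, ignoring padding positions."""
    kept = [vec for vec, m in zip(hidden, mask) if m]
    if not kept:
        raise ValueError("sequence has no unmasked tokens")
    return [sum(dim) / len(kept) for dim in zip(*kept)]


def reward_head(pooled: Sequence[float], weight: Sequence[float], bias: float = 0.0) -> float:
    """Linear layer with a single output unit: the scalar reward."""
    return sum(p * w for p, w in zip(pooled, weight)) + bias


hidden = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]  # three tokens, hidden size 2
mask = [1, 1, 0]                               # last token is padding
pooled = masked_mean_pool(hidden, mask)        # mean over the first two tokens
score = reward_head(pooled, weight=[0.5, -0.5], bias=0.1)
```

Masking matters because completions in a batch have different lengths. Without it, padding vectors would pull the pooled representation of short completions toward whatever the model emits at padded positions.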

For the loss function, I’ll implement the loss from equation (1) with an additional L2 regularization term to prevent overfitting. For each prompt with \(k\) completions there are \(k \choose 2\) comparisons. The loss is calculated for each prompt individually and then averaged across prompts to get the batch mean loss.
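A minimal sketch of this batch loss is shown below, using batch_k_lens to split a flat list of scores back into per-prompt groups. Two things here are assumptions on my part: the L2 term penalizes the mean squared score within each group, and it is weighted by a hypothetical coefficient `l2_coef`. The text above does not pin down either detail.

```python
import math
from itertools import combinations
from typing import List, Sequence


def softplus(x: float) -> float:
    # Stable log(1 + exp(x)); note that -log(sigmoid(d)) == softplus(-d).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def batch_ranking_loss(scores: Sequence[float], batch_k_lens: Sequence[int],
                       l2_coef: float = 0.001) -> float:
    """scores is flat: completions of prompt 0 (best first), then prompt 1, and so on."""
    if sum(batch_k_lens) != len(scores):
        raise ValueError("batch_k_lens must sum to the number of scores")
    prompt_losses: List[float] = []
    start = 0
    for k in batch_k_lens:
        if k < 2:
            raise ValueError("each prompt needs at least two completions")
        group = scores[start:start + k]
        start += k
        pairs = list(combinations(group, 2))  # (preferred, less preferred) scores
        nll = sum(softplus(l - w) for w, l in pairs) / len(pairs)
        l2 = sum(s * s for s in group) / k    # assumed form of the L2 term
        prompt_losses.append(nll + l2_coef * l2)
    return sum(prompt_losses) / len(prompt_losses)


loss = batch_ranking_loss([1.5, 0.2, 2.0, 1.0, -1.0], batch_k_lens=[2, 3])
```

Averaging per prompt first, rather than over all pairs in the batch, keeps a prompt with many completions from dominating the batch loss.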

Finally, we will pass all of these, together with the training arguments, to a custom trainer to train and evaluate our model.

If you wish to experiment with reward modeling, I have open-sourced a simple framework to help you do so. If you wish to contribute, I would recommend adding support for one of the open datasets from the next section.

Open Datasets for Reward Modeling

If you know any other dataset that would fit in this list, let us know in the comment section.

Conclusion

Reward modeling is a crucial part of language model alignment as reward models can largely influence what the model perceives as good and bad behavior. More efforts should be encouraged to curate open datasets to train reward models which will help in democratizing LLMs. There is also an urgent need for further research and development in reward modeling to ensure that LLMs can truly benefit humanity.

Don't forget to subscribe to Exploding Gradients if you enjoyed reading this article. Additionally, you might find my Twitter feed to be equally interesting, so be sure to check it out for more great content.