Recently, we have witnessed a significant surge in the development and usage of open-source large language models and projects based on them. Remarkably, some of these models, after fine-tuning, can even match the performance of OpenAI's GPT-3.5 models. This blog aims to provide an overview of the freely available large language models and the essential details you should be aware of while working with them.

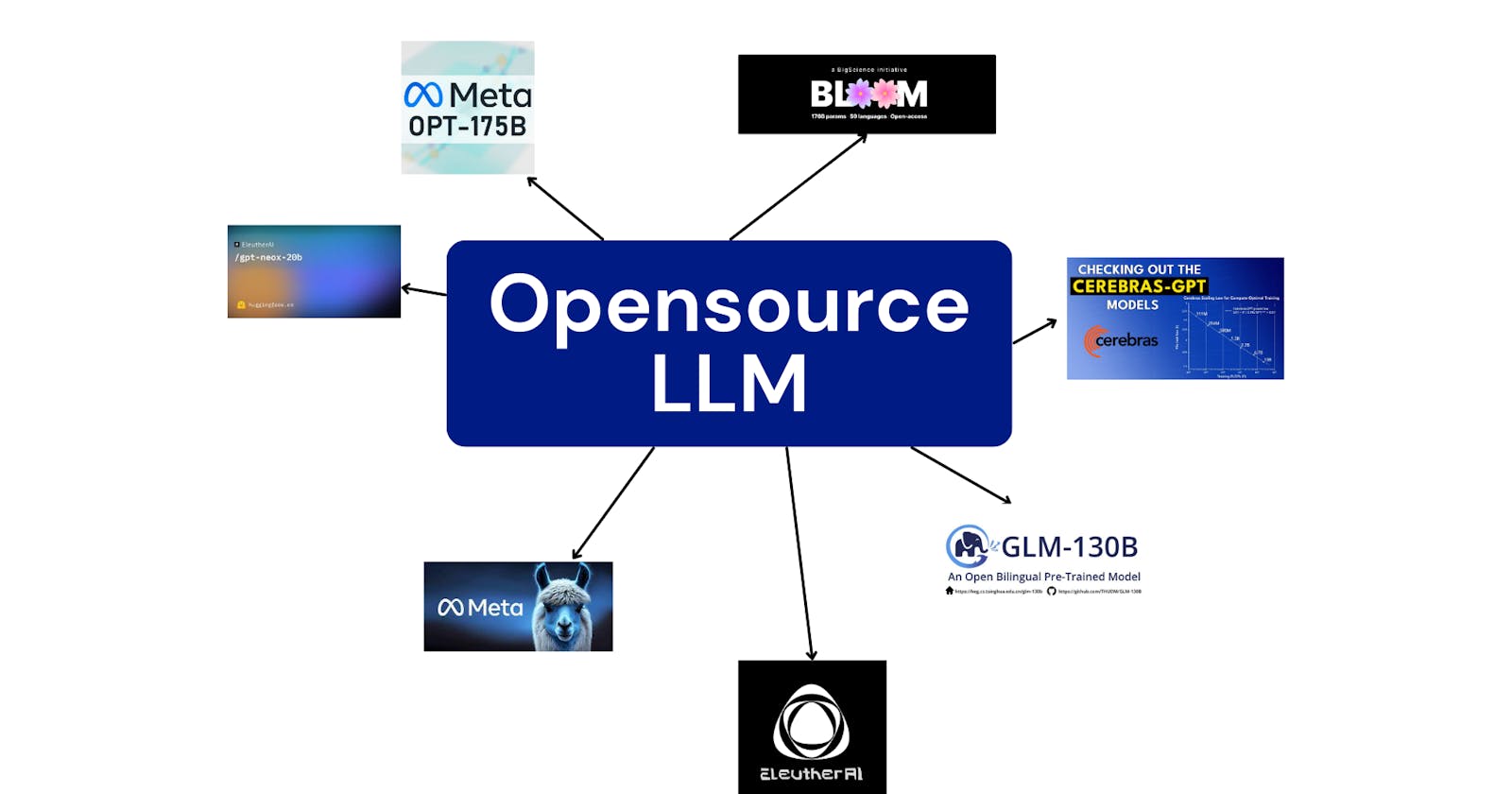

Here I discuss the seven most popular open-source large language models and the interesting projects built on top of them.

Open-source LLMs

I have selected seven open-source LLMs with more than 10 billion parameters that show promising performance. I only consider models whose weights are available to the public. Some of these are families of LLMs that come in different sizes. Let’s look at each of them in detail.

OPT

OPT (Open Pre-trained Transformer) is a suite of LLMs developed by Meta AI. The model architecture follows settings similar to those of Megatron-LM. OPT models are trained on data combined from the RoBERTa corpus, the Pile, and Reddit data from PushShift.io, all of which contain predominantly English text. The data was deduplicated before training, and the model has seen about 180b tokens during training. The context length of OPT models is 2048 tokens. OPT models range from 125m parameters to 175b parameters. Available parameter sizes are 125m, 350m, 1.3b, 2.7b, 6.7b, 13b, 30b, 66b, and 175b.

In one-shot and few-shot settings, the OPT 175b model performs comparably to GPT-3 across different datasets and tasks. It was also shown that OPT 175b is more likely to generate toxic responses than GPT-3. OPT model weights are available under a non-commercial license.

BLOOM

BLOOM is a decoder-only autoregressive language model with an architecture modified from Megatron-LM GPT-2. BLOOM was developed in the BigScience workshop, which was funded primarily by the French government. BLOOM is trained on data from 46 natural languages and 13 programming languages. The model was trained on the ROOTS dataset (1.5TB). The context length of BLOOM models is 2048 tokens. The model has seen about 350B tokens during training. BLOOM was trained in six model sizes ranging from 560m parameters to 176B parameters. Available parameter sizes are BLOOM 560m, BLOOM 1B (2 versions), BLOOM 3B, BLOOM 7B, and BLOOM 176B.

BLOOM was one of the first efforts to create LLMs at scale with a high level of multilingualism. Its performance is reported to match that of OPT models under one-shot settings on benchmarks like SuperGLUE, and it is not limited to the English language. Personally, though, after trying out BLOOM, I feel it might have been affected by the curse of multilingualism. BLOOM model weights are more openly licensed and can be used for building commercial applications.

Pythia

The Pythia language model suite is a collection of LLMs developed by EleutherAI to facilitate interpretability research. The models were trained using the GPT-NeoX library. The Pile dataset, which is a curation of English-language datasets, was used for pre-training. Models were trained on both deduplicated and non-deduplicated versions of the Pile. Each model has seen roughly 300b tokens during training. The context length of Pythia models is 2048 tokens. Pythia models range from 70m parameters to 12b parameters, each of which is available in two versions. Available parameter sizes are 70m, 160m, 410m, 1b, 1.4b, 2.8b, 6.9b, and 12b. You can download all of these from the official repo.

Pythia models display very similar performance to BLOOM and OPT models of similar size. The model weights are available on Hugging Face under the Apache 2.0 license, which permits commercial and non-commercial usage.

GLM 130b

GLM 130b is a bilingual (English and Chinese) large language model developed at Tsinghua University, China. Unlike the GPT-style decoder-only models above, it is built on the GLM architecture, which combines bidirectional attention with an autoregressive blank-infilling objective. It also includes multi-task instruction pre-training alongside the usual self-supervised learning. The pre-training data includes the 1.2T Pile dataset and the Chinese 1T Wudao Corpora. The model has seen roughly 400b tokens during pre-training. The maximum context length of this model is 2048 tokens. The authors also released an INT4 quantized version with minimal performance degradation.
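To get a feel for why an INT4 release matters, here is a rough back-of-the-envelope sketch of the storage needed for the weights alone at different precisions. The helper name `weight_memory_gb` and the bytes-per-parameter table are my own illustration, not something from the GLM release, and the estimate ignores activations, the KV cache, and quantization metadata, so real memory use will be higher.

```python
# Bytes needed to store one parameter at each precision (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    Hypothetical helper: it ignores activations, the KV cache, and
    quantization metadata such as scales, so treat it as a lower bound.
    """
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    glm_params = 130e9  # GLM 130b has roughly 130 billion parameters
    for precision in ("fp16", "int8", "int4"):
        print(f"{precision}: ~{weight_memory_gb(glm_params, precision):.0f} GB")
```

Under these assumptions, moving from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which is the difference between needing a multi-GPU server and fitting on far more modest hardware.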

The GLM-130B model demonstrates superior performance compared to GPT-3 175B across various widely used English benchmarks. However, this advantage is not evident when compared to OPT-175B and BLOOM-176B. Compared to GPT-3 Davinci, GLM 130b has displayed a lower level of toxicity.

GPT-NeoX-20B

GPT-NeoX-20B is a large language model developed by EleutherAI. The model uses a GPT-3-like architecture with notable changes, such as replacing learned positional embeddings with rotary positional embeddings. The model was pre-trained on the Pile dataset and has seen about 400b tokens during training. The maximum context length of this model is 2048 tokens.

It was reported that GPT-NeoX-20B is a particularly powerful few-shot reasoner and gains far more in performance when evaluated five-shot than a similarly sized GPT-3 model. The model weights are freely available through a permissive license.

LLAMA

LLAMA is a suite of large language models developed and open-sourced by the FAIR team at Meta AI. The model uses a decoder-only autoregressive transformer with changes inspired by GPT-NeoX, PaLM, and GPT-3. LLAMA models are trained on a mix of data collected from CCNet, C4, GitHub, Wikipedia, Books, ArXiv, and Stack Exchange. This corpus includes data from 20 languages. The training procedure is informed by the Chinchilla scaling laws (highest accuracy for a given compute budget), and even the smaller models are trained on about 1 trillion tokens. The context length of LLAMA models is 2048 tokens. LLAMA models are available in 7B, 13B, 33B, and 65B parameter sizes.

LLAMA 13b outperforms GPT-3 on most benchmarks and LLAMA 65b is competitive with the latest models like Chinchilla 70b on a variety of tasks. LLAMA model weights are available under a non-commercial license.

Cerebras GPT

Cerebras GPT is a suite of large language models developed and released by Cerebras. The model uses a GPT-3-like architecture with minor modifications. The Pile dataset is used for model pre-training. The models in this family are trained according to the Chinchilla scaling laws, so different model sizes are exposed to different numbers of tokens. The largest model (13b parameters) has seen about 257b tokens during training. The maximum context length of this model is 2048 tokens. Cerebras GPT models are available in 111m, 256m, 590m, 1.3b, 2.7b, 6.7b, and 13b parameter sizes.

Cerebras GPT 13b shows better average downstream task accuracy than OPT and Pythia models of similar size, but the family does not outperform them at any of the other model sizes. The model is released under the Apache 2.0 license.
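The link between model size and token count can be sketched with the common rule of thumb that the Chinchilla work is usually summarized by: roughly 20 training tokens per parameter. That ratio is an assumption I am bringing in, not a number stated in this post, and the function names below are hypothetical. The 13b and 257b figures are the ones quoted above.

```python
# Assumed rule of thumb (not from this post): ~20 training tokens per parameter.
TOKENS_PER_PARAM = 20


def compute_optimal_tokens(n_params: float,
                           tokens_per_param: float = TOKENS_PER_PARAM) -> float:
    """Estimated training-token budget for a model with n_params parameters."""
    return n_params * tokens_per_param


def tokens_per_parameter(n_params: float, n_tokens: float) -> float:
    """Observed ratio of training tokens to parameters."""
    return n_tokens / n_params


if __name__ == "__main__":
    # Parameter sizes of the Cerebras GPT family, as listed above.
    sizes = {"111m": 111e6, "256m": 256e6, "590m": 590e6, "1.3b": 1.3e9,
             "2.7b": 2.7e9, "6.7b": 6.7e9, "13b": 13e9}
    for name, n_params in sizes.items():
        budget_b = compute_optimal_tokens(n_params) / 1e9
        print(f"{name}: ~{budget_b:.1f}b tokens under the 20x assumption")

    # Sanity check against the reported 257b tokens for the 13b model.
    ratio = tokens_per_parameter(13e9, 257e9)
    print(f"13b model, reported: {ratio:.1f} tokens per parameter")
```

The reported 257b tokens for the 13b model works out to just under 20 tokens per parameter, which is consistent with this rule of thumb.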

Evaluation

It is very difficult to compare all these models due to the unavailability of scores for each dataset.

I have aggregated scores from different research papers to give you an idea of each model’s performance under zero-shot settings. The table can be overwhelming to compare. If you’re here to see how open-source LLMs are catching up with OpenAI, some of the models, such as OPT 175b and LLAMA 65b, have reported performance similar to GPT-3 in zero-shot settings.

| Benchmark | BLOOM 176B | Pythia 12b | GLM 130b | LLAMA 65b | LLAMA 13b | LLAMA 33b | Cerebras GPT 13b | OPT 175b | OPT 13b | OPT 30b | OPT 66b | GPT-NeoX 20b |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| LAMBADA | 67.2 | 71.2 | 80.2 | 69.6 | 74.7 | 72.0 | | | | | | |
| HellaSwag | 50.5 | 84.2 | 82.8 | 79.2 | 51.3 | 75.2 | 52.4 | 72.3 | 74.5 | 53.8 | | |
| MMLU | 29.9 | 34.4 | 63.4 | 46.9 | 57.8 | 31.8 | 27.6 | 27.6 | | | | |
| BoolQ | 0.704 | 0.784 | 85.3 | 78.1 | 83.1 | 0.793 | 0.76 | 0.683 | | | | |
| TruthfulQA | 0.205 | 0.218 | 0.57 | 0.47 | 0.52 | 0.25 | 0.201 | 0.216 | | | | |
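Because these numbers come from different papers, some rows above mix fractions (such as 0.704) with percentages (such as 85.3). A minimal sketch of how you might put them on one scale before comparing is shown below. The function name `to_percent` and the "anything at or below 1.0 is a fraction" rule are my own assumptions, and the rule would misread a genuine percentage score below 1%.

```python
def to_percent(score: float) -> float:
    """Put a reported score on a 0-100 scale.

    Heuristic (an assumption, not a standard): values <= 1.0 are treated
    as fractions and scaled by 100; larger values are assumed to already
    be percentages and are returned unchanged.
    """
    if score <= 1.0:
        return round(score * 100, 1)
    return score


if __name__ == "__main__":
    # Mixed-scale values copied from one row of the table above.
    mixed_row = [0.704, 0.784, 85.3, 78.1, 83.1, 0.793, 0.76, 0.683]
    print([to_percent(s) for s in mixed_row])
```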

Fortunately for us, this task is going to be much easier in the future with Stanford's new HELM project for evaluating LLMs. Please check out the website for further insights.

How to choose a foundation model?

There are no hard and fast rules for choosing the right foundation model to fine-tune for your application. If you’re looking to pick an open LLM to fine-tune on your own dataset, I would recommend trying the newer ones that follow the Chinchilla scaling rules, such as the LLAMA models. Another way to select LLMs for task-specific fine-tuning is to compare the models’ performance on the reported benchmarks most closely related to your task. For example, if you’re looking to build an LLM that can solve math problems really well, you could compare the raw performance of different LLMs on GSM8K to select the best-performing model. Also, keep in mind that a larger model size will lead to an increase in inference latency and cost. If you're building something and wish to brainstorm, my DMs are open :)
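The selection process described above can be sketched as a small filter-then-rank step. Everything named here (`Candidate`, `pick_model`, `CANDIDATES`) is hypothetical. The sizes and license flags follow the descriptions earlier in this post, but the scores are made-up placeholders, not real benchmark results, so swap in the numbers for the benchmark closest to your task.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    params_b: float        # parameter count in billions
    commercial_use: bool   # True if the license permits commercial use
    score: float           # score on the benchmark closest to your task


# Sizes and licenses as described in this post.
# Scores are PLACEHOLDERS for illustration only, not measured results.
CANDIDATES = [
    Candidate("LLAMA 13b", 13, False, 5.0),
    Candidate("GPT-NeoX-20B", 20, True, 4.0),
    Candidate("Pythia 12b", 12, True, 3.0),
    Candidate("OPT 13b", 13, False, 2.0),
    Candidate("Cerebras GPT 13b", 13, True, 1.0),
]


def pick_model(candidates: List[Candidate], max_params_b: float,
               need_commercial: bool) -> Optional[Candidate]:
    """Return the best-scoring model that fits the size and license limits."""
    eligible = [
        c for c in candidates
        if c.params_b <= max_params_b
        and (c.commercial_use or not need_commercial)
    ]
    # default=None covers the case where no model satisfies the constraints.
    return max(eligible, key=lambda c: c.score, default=None)


if __name__ == "__main__":
    choice = pick_model(CANDIDATES, max_params_b=13, need_commercial=True)
    print(choice.name if choice else "no model fits the constraints")
```

Filtering on hard constraints first (size budget, license) and only then ranking by score keeps a strong but unusable model from winning the comparison.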

Open-source projects

Open-source LLMs are increasingly used in open-source projects. Here are some of the most popular ones:

Alpaca: A strong instruction-following LLM built on LLAMA.

Alpaca-LoRA: Code for reproducing Alpaca using low-rank adaptation.

Open-Assistant: Instruction fine-tuning and RLHF on LLAMA and Pythia models.

Colossal AI: A ChatGPT-type model with a complete RLHF pipeline based on LLAMA.

Vicuna: A fine-tuned version of the LLAMA model that reportedly reaches about 90% of ChatGPT's quality, as judged by GPT-4.

Koala: LLAMA 13b fine-tuned on internet dialogues.

BLOOM-LoRA: Instruction fine-tuning on BLOOM models.

Petals: Distributed fine-tuning for BLOOM models.

alpaca.cpp: Run Alpaca models locally using a C++ implementation.

GPT4all: Demo, data, and code to train your own GPT model using LLAMA.

Cerebras LoRA Alpaca: Cerebras GPT fine-tuned with Alpaca-LoRA.

Dalai: A simple way to run LLAMA models locally.

Conclusion

In conclusion, large language models have the potential to revolutionize our everyday lives, and it is crucial that we ensure open access to these technologies. By promoting open source, we can foster innovation that ultimately brings about positive impacts on society. I hope this blog has provided you with valuable insights into some of the top open large language models and projects currently available. Stay updated on my latest AI content and blogs by following me on Twitter.